AI doesn’t make it much easier to build security startups

A somewhat contrarian take on how AI impacts the ability to build security companies

There are many discussions about how AI is changing the way the cybersecurity industry operates, and I am certainly the last person to argue with this thought. At the same time, I have developed the perspective that for startups, it doesn’t change the game as much as many assume it does. Before I lose you completely, let me explain.

For this conversation to make sense, I think we need to separate two lines of thought: what AI enables for customers, and what AI solves for startups. These are two very different conversations, and while I want to focus the article on the latter, it won’t fully make sense if I don’t briefly address the former.

For customers, AI is transforming how security is done

Over the past year, it has become clear to me that AI is already transforming how security is done. Now, this is not because LLMs are perfect at detection, or that AI has no gaps (they aren’t, and it does). The much more important reason why I am bullish on the opportunities this wave of AI unlocks is simple. Well over 90% (and some people would even say 95-97%) of security teams’ day-to-day is not some advanced incident response or dealing with nation-states. Most of their work has nothing to do with chasing advanced adversaries. Instead, it’s boring, mundane stuff like:

Updating reports and dashboards for leadership

Collecting screenshots and evidence for audits

Responding to repetitive access and compliance requests

Reconciling data across tools and systems

Investigating low-priority alerts that never amount to much

Documenting findings and closing out endless tickets

I previously wrote a dedicated deep dive about this if you are interested in reading more: Most of the security teams’ work has nothing to do with chasing advanced adversaries.

The main point here is that all this manual stuff is exactly the kind of work that lends itself to automation, and where AI agents can make a lot of difference. As an industry, I think we should be excited to use AI to streamline or eliminate the time-consuming but necessary work, and buy ourselves time to focus on the high-value work that we simply can’t get to. This is what scaling security teams means, and I am very optimistic about the opportunities this creates for both enterprises and startups.

The impact of AI for security startups is trickier to evaluate

When it comes to what AI enables for security startups, my opinion diverges from a lot of the stuff I read online. I’ve seen some people suggest that AI makes it possible to quickly validate demand, to radically shorten time to market, and even to avoid having to hire senior engineering talent. Some even think that enterprises will replace all of their expensive SaaS subscriptions with homegrown agentic solutions. I don’t quite agree with any of these statements.

AI doesn’t really make it significantly easier to validate demand in security

I am a huge fan of AI prototyping tools (V0 is one of my favorites, but if you have suggestions for even better ones, I’d love to hear them). These tools make it possible to iterate on designs in minutes and to generate good-enough working prototypes for testing with prospects. They are fantastic. So why do I then say that AI doesn’t really make it significantly easier to validate demand in security? The answer is pretty basic: getting feedback on a prototype is far from real demand validation.

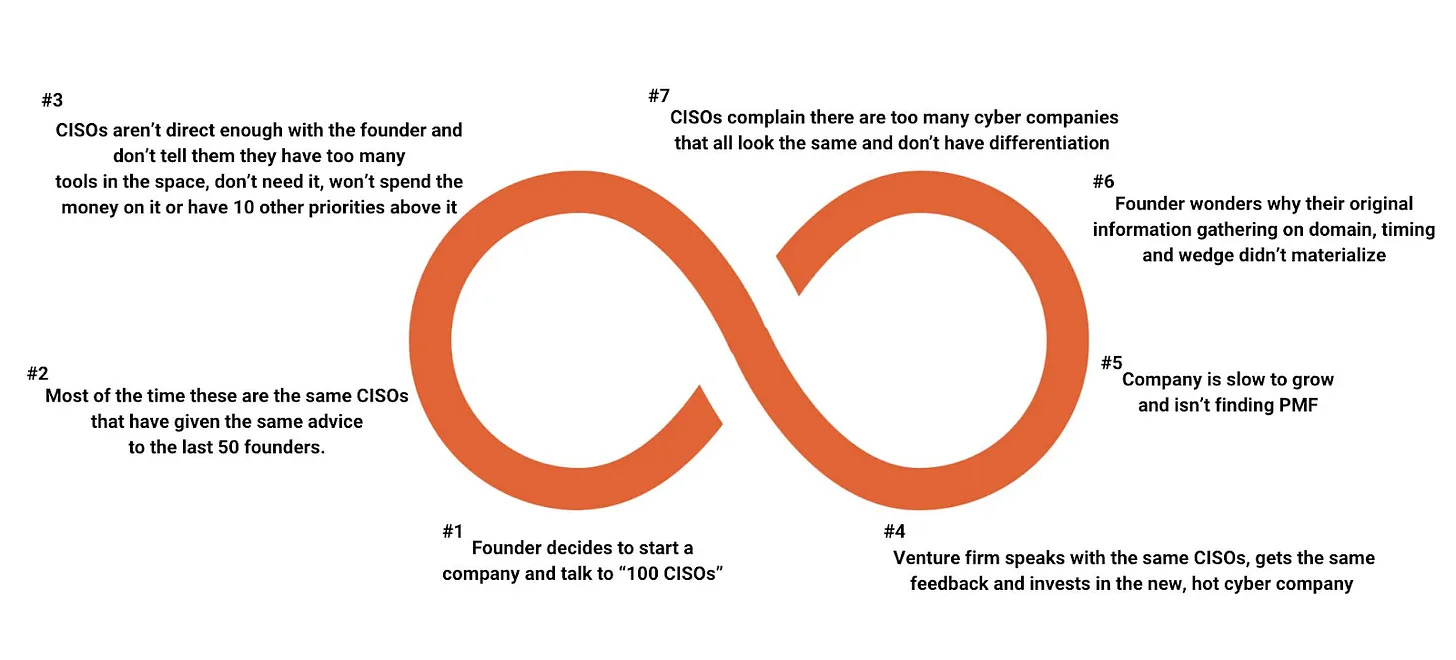

I recently wrote an article titled The real dilemmas of cybersecurity startup ideation, discovery, and validation where I expanded on some thoughts shared online by Stephen Ward and referenced an amazing illustration of the ideation journey he shared on LinkedIn.

Image source: Stephen Ward

What I am talking about here is different. It is more about the fact that real validation in B2B only really comes when someone is writing a check. Ideally you want this someone to not have any incentives to be doing this, aside from wanting to solve a painful problem at their company.

People will always be willing to share their perspectives, and having a visual illustration of an idea is very, very important. AI prototyping tools can help gather feedback, learn about the problem, and even plan what capabilities should be prioritized first based on user feedback. The one thing prototypes do a poor job of is validating that someone is going to pay real money (based on my observations, anyway).

AI doesn’t significantly expedite go-to-market in security

I have heard many people in the industry say things like “Now that I can ship new features much faster, I can scale with 3x-10x speed”. That may hold in other markets, and it certainly does in the consumer space, but in security, not so much.

It is true that founders can now ship new features faster, but the biggest obstacle to fast growth in security has never been the speed of shipping new features. The number one obstacle security founders have to grapple with is years-long sales cycles, and that isn’t changing anytime soon.

Andrew Peterson of Aviso Ventures, and formerly founder & CEO of Signal Sciences, put it really, really well in his post on LinkedIn: “AI is changing how fast you can build products and features but it isn’t changing how slow sales cycles are in security. This will continue to hamper the speed of growth in infosec despite the increased speed to build features.

You might think the costs of the products could go down a ton and drive speed of adoption but the price of security products have almost always been driven by sales and marketing costs, not dev costs or gross margins (that tend to be legendarily good in security).

Until we see sales cycles improve for security, we’re gonna be stuck with slow adoption curves for the time being. How I’d expect to see this change is a longer convo but short version is I don’t. Mainly because security relies on eng for install of meaningful controls and they’re rarely a priority (despite being easier and easier to actually implement).

All this said the growth of security start ups have always been strong and I predict will continue to be cause of constant new opportunities and innovation. I just don’t predict the growth to accelerate cause of AI despite expecting this in other sectors.”

I don’t think I can add much to this rather perfect summary of what I’ve also been seeing. If a feature that used to take 9 months to ship now takes 6 months, that’s a dramatic improvement (about 33% shorter). But if the same feature takes a year to sell, then suddenly the math is different. What would usually take 1 year 9 months from concept to first paying customer can now take 1 year 6 months, which is only about 14% faster overall. In reality, it can be even longer because security teams afraid of risks introduced by AI-generated code are likely to extend their evaluation periods and ask to see more architectural diagrams and so on. We may cut time on writing software, but if the sales cycles remain the same or even become longer, we aren’t going to see radical differences in GTM speed.
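For anyone who wants to play with these numbers, here is a minimal sketch of the arithmetic in Python. It uses the figures from the paragraph above (9 versus 6 months to build, one year to sell) and assumes, for simplicity, that selling only starts once building is done. The function names are made up for illustration, and the 15-month sales cycle at the end is a hypothetical scenario, not a measured figure.

```python
def time_to_first_customer(build_months: float, sales_months: float) -> float:
    """Months from concept to first paying customer, assuming build then sell."""
    return build_months + sales_months


def percent_saved(before: float, after: float) -> float:
    """Percentage reduction when going from `before` to `after`."""
    return (before - after) / before * 100


# Figures from the article: 9 -> 6 months to build, 12 months to sell.
SALES_CYCLE_MONTHS = 12
before = time_to_first_customer(9, SALES_CYCLE_MONTHS)  # 21 months
after = time_to_first_customer(6, SALES_CYCLE_MONTHS)   # 18 months

build_saving = percent_saved(9, 6)
total_saving = percent_saved(before, after)

# Hypothetical scenario: buyers wary of AI-generated code stretch the
# sales cycle by three months, from 12 to 15.
after_longer_sales = time_to_first_customer(6, 15)
saving_with_longer_sales = percent_saved(before, after_longer_sales)

print(f"Build time saved: {build_saving:.0f}%")
print(f"Concept-to-customer time saved: {total_saving:.0f}%")
print(f"Saved if sales take 15 months: {saving_with_longer_sales:.0f}%")
```

The point of the sketch is that the sales cycle dominates the total: under these assumptions, a three-month extension of the evaluation period is enough to cancel out the entire engineering speedup.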

AI doesn’t make it possible to avoid hiring senior engineering talent

Then there’s the question about talent. I’ve heard from several people that with tools like Claude Code, companies can hire fewer senior engineers and instead startups can attract young and hungry talent eager to use new tools. As someone smart once said, in theory, there is no difference between theory and practice, but in practice, there is. This very much applies when it comes to developers and AI-generated code.

My thought process is simple: from first principles, not much has changed, and most likely, not much will:

People who are hungry to learn and grow and do new things, but who don’t have solid experience, will, over time, outperform those who have experience but are much less motivated to learn and grow. This was the case before AI, and there will be no changes with AI. AI will only amplify the difference between motivated and non-motivated engineers. It’s 2025 and any software engineer that “didn’t have the time” to get started with AI coding assistants yet is probably on their way out of the industry.

People humble enough to admit they don’t have all the answers and smart enough to always look for shortcuts will, over time, outperform those who have more answers but feel like looking for shortcuts hurts their ego or makes them lesser. This was true before AI, when some devs were just “too good” to go to Stack Overflow or to just ask their colleagues for help and would waste hours trying to do things themselves. AI just makes the difference between two kinds of engineers more stark.

People who embrace new tech and new ways of working will always outperform those who don’t. There were plenty of developers who were against cloud, against agile, against shipping in smaller increments, against shipping continuously, etc. History has proven them all wrong big time. I don’t think it will be any different with AI: developers who don’t embrace it will go the same way as those who didn’t want to embrace the cloud.

Experience will continue to matter when it comes to building for scale and building for deterministic precision. It’s one thing to build a service, and it’s another thing to build a service that will perform at enterprise scale.

Domain expertise will continue to matter even more in a world where people think they can just AI their way into areas they don’t know anything about.

Companies that hire a bunch of engineers and ask them to use Claude to generate code without establishing real guardrails to make sure the quality of that code is solid will drown in technical debt before they close their first paying customer. In my opinion, senior engineers without AI tools are simply too slow and too expensive; junior engineers with no experience but drive and AI coding tools are likely to break too many things and create mountains of tech debt. The best answer is to hire great engineers and to give them all the latest and greatest tools. This does, however, mean that attracting amazing talent is as critical today as it was before (or arguably even more!).

Another interesting thing people miss is that AI is making building software harder, not easier. Mrinal Wadhwa articulated that thought several weeks ago: “Most software, until now, was focused on forms. Most software engineers spent their careers building 3-tier web apps. Products with agents, in contrast, are stochastic; each request has a long lifecycle; communication happens over bi-directional streams of messages; state is distributed across agents; etc. This is harder engineering. Sure, generating code has gotten easier, but to build reliable products that scale and are secure we now need much more complex architectures.” I recommend listening to this short 7-minute snippet because it offers a good perspective as to why strong engineering talent is even more critical today compared to several years ago.

AI isn’t going to kill security products anytime soon

Over the past several months, I have heard some people say, “I can now vibe code most of the security products over the weekend”, predicting that the wave of vibe coding will lead enterprises to stop buying SaaS. In my opinion, this could not be further from the truth.

People who believe enterprises buy software only because they can’t build it in-house fundamentally misunderstand how large organizations operate. Open source has been around for decades, so why do enterprises still spend millions on freely available software? Because at their scale, the real cost isn’t in per-seat licenses; it’s in maintenance, reliability, and support.

Once a company reaches 5,000 employees (and definitely beyond 25,000), it needs scalable systems of ownership, maintenance, and accountability. If everyone just vibe coded their own tools and moved on, the whole structure would collapse. Every large enterprise already has a few internal tools whose creators have left (or even passed away), leaving behind fragile systems no one is brave enough to touch. That’s exactly why they choose to buy, not build, and that’s why they are so careful about throwing AI at problems.

Another aspect of security is that it is all about depth. Sure, anyone can vibe code some high-level basic system that will ingest cloud configs and output some findings. That is indeed very easy. However, no amount of vibe coding will make it work at enterprise scale, with enterprise complexity, in enterprise environments. More critically, to identify risks in complex systems, security products have to be five inches wide and ten feet deep, and that depth is something that comes from human expertise, research, and clear focus, not from telling Claude to write some “cloud detection logic”.

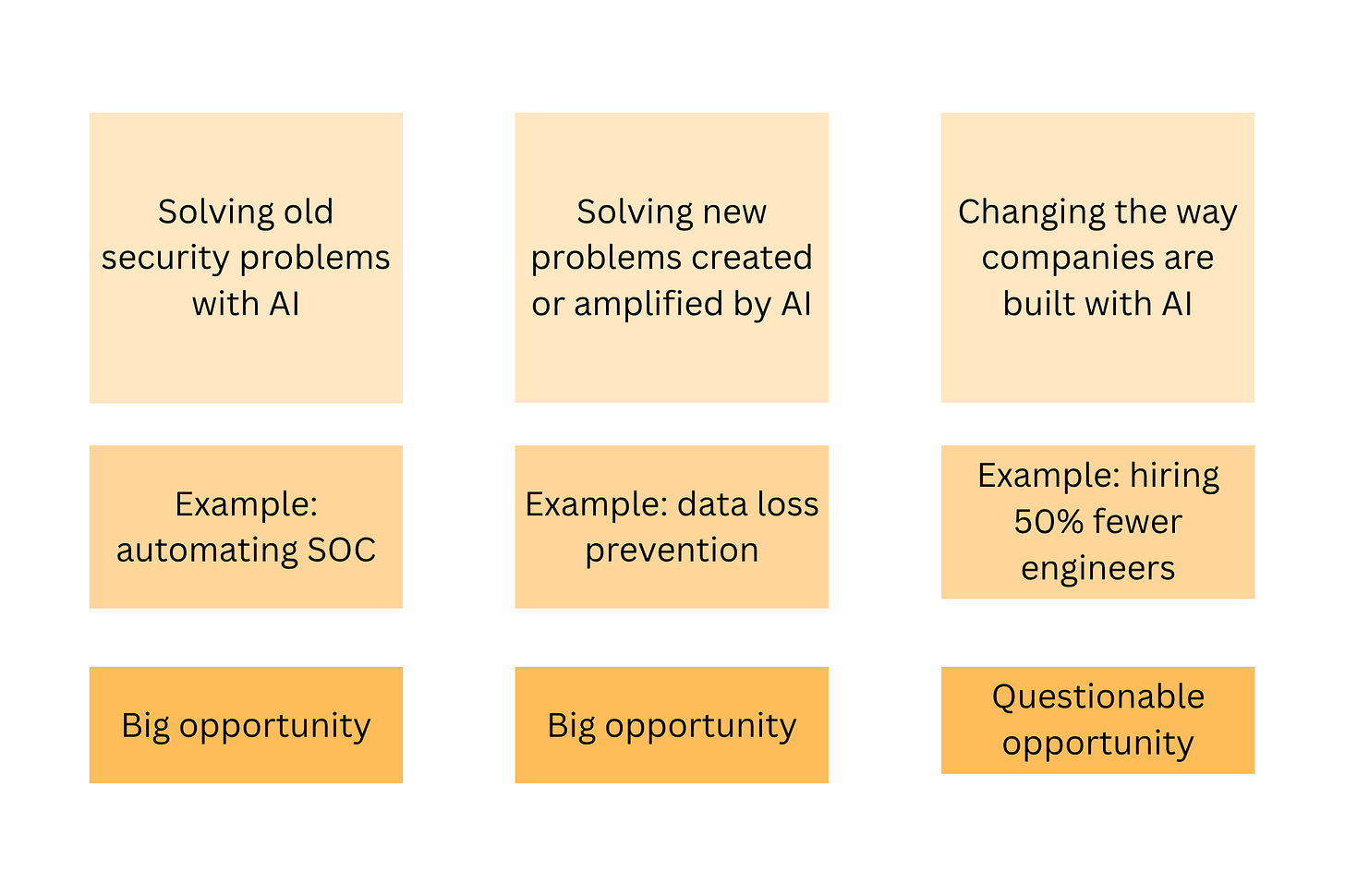

Closing thoughts: for startups, AI is a great amplifier, but it’s not a compensator

There are many discussions about how AI makes it drastically easier to build startups. It may be the case in other industries and market segments, but I don’t think it’s true for security. Let me be clear and reiterate that AI does enable startups to solve problems that were fundamentally unsolvable before. In addition, it amplifies a lot of the security problems our industry has been trying to solve (data security, SaaS security, third-party risk management, etc.). All this creates new possibilities, new problems, and therefore new markets and new opportunities.

However, what AI doesn’t do in my view is change how security companies are built. It does make some things like prototyping easier, but it doesn’t make a bad idea a good one, and it doesn’t make “this looks good” a real validation. AI makes great developers even more productive than before, but it doesn’t turn a junior developer into a senior, or an unmotivated developer into a 10x high-performing engineer. In other words, AI is a great amplifier, but it’s not a compensator.

Most importantly, AI doesn’t really change the fundamentals:

That security is about trust, and the unpredictability of AI only raises the bar for developing trust.

That enterprise sales are about navigating people and complexity, and AI does little to reduce that complexity.

That building great products requires depth and expertise, and you cannot win by relying on what is literally the average of human knowledge.

The fundamentals of building a successful company don’t really change: a large market, a strong team, and ten million decisions that have to be made right, with the hope that the wrong decisions will be insignificant enough not to matter.
