It’s complicated: wrapping up a year of excitement with AI in security and security for AI

Security for AI and AI in security from a bird's eye view


There are so many articles on AI in security that I want to be very clear about the scope of this piece. My goal is to look at security for AI and AI in security from a meta-level: assessing whether AI security will follow the same adoption path as other security tools, asking whether now is a good time to build AI security platforms, and speculating about what startups that raised tens of millions of dollars without much in terms of paying customers might be trying to do. Moreover, I will share some ideas surrounding the use of AI to solve fundamental security problems.

This article does not attempt to offer a list of specific security problems that can be solved with AI, nor is it a comprehensive overview of all ways in which LLMs can be attacked or used for malicious purposes. There are plenty of experts who have covered these two areas, and I don’t feel like the world needs another article that repeats what has been said by much smarter people already.

Looking at the VCs’ and founders’ excitement about the security of AI

Following the patterns learned from securing other technologies

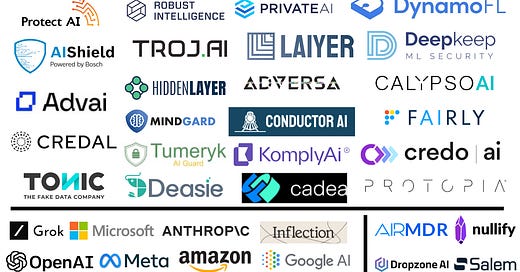

While there has been a lot of excitement about AI in security among entrepreneurs, this is even more true of VCs. Although most of the investment capital has been going to the model layer, the security of AI has seen its fair share of believers.

At first glance, so much VC excitement makes little sense. Cybersecurity startups tend to run into a first-mover disadvantage: a company that is championing a new approach or securing a new attack vector has to spend a lot of time and money educating the market about it. This time, it appears to be different because everyone is talking about AI, and just over a year after ChatGPT came out, governments in the US and Europe are trying to set guardrails around its use, so far with varying degrees of success. With the right team, the first-mover disadvantage can be outweighed by the first-mover advantage and the ability to do a land grab in this new field.

Historically, the adoption of security followed the adoption of new technology by enterprises, with a lag of three to five years. This cycle has been repeating itself with different frameworks and technologies. Take the cloud as an example. Not only did it take companies many years before they were comfortable considering the cloud (some are hesitant to this day), but during the first years of adoption few businesses had any understanding of what cloud security looks like and what types of threats they should be concerned with. Moreover, it took well over a decade before large platforms such as Wiz and Orca appeared. Orca was founded in 2019, and Wiz a year after that. For context, that is a decade and a half or more after AWS launched its first infrastructure service for public usage, Simple Queue Service (SQS), in 2004. Even Lacework, another cloud security unicorn, started in 2015, just over a decade after that launch.

I have previously discussed the reasons why Wiz and Orca were able to build large platforms so quickly. To recap, here are the two factors that made it possible:

Since the cloud was designed to be infinitely scalable and API-first, the cloud providers have built powerful APIs. This made it possible for one company to implement most of the logic and build most of the components needed to secure the cloud. Each of the cloud providers (AWS, Azure, GCP, DigitalOcean, and others) exposes ways to extract data, so cloud security vendors just need a way to normalize it to a common format and map similar concepts across different platforms.

By the time cloud security became a need, most concepts, from asset management to vulnerability management, detection & response, everything-as-code, and so on, were already well understood. This means that the founders of cloud security companies were able to move fast and implement what was already built for other asset classes.
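To make the first factor more concrete, here is a minimal Python sketch of what "normalize it to a common format" can look like. Everything in it is an assumption made for illustration: the `Asset` schema, the two provider names, and the payload shapes are hypothetical and do not reflect any real cloud provider's API responses or any vendor's actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """Provider-neutral record for a compute instance (hypothetical schema)."""
    provider: str
    asset_id: str
    region: str
    publicly_reachable: bool


def from_provider_a(raw: dict) -> Asset:
    # Hypothetical payload shape: flat keys, public IP present only if exposed.
    return Asset(
        provider="provider_a",
        asset_id=raw["instance_id"],
        region=raw["region"],
        publicly_reachable=bool(raw.get("public_ip")),
    )


def from_provider_b(raw: dict) -> Asset:
    # Hypothetical payload shape: nested network block with an explicit flag.
    return Asset(
        provider="provider_b",
        asset_id=raw["id"],
        region=raw["location"],
        publicly_reachable=raw["network"]["external_access"],
    )


# One normalizer per provider; supporting a new cloud means adding one entry.
NORMALIZERS = {"provider_a": from_provider_a, "provider_b": from_provider_b}


def normalize(provider: str, raw: dict) -> Asset:
    return NORMALIZERS[provider](raw)


def publicly_reachable_ids(assets: list[Asset]) -> list[str]:
    # A single check, written once against the common format.
    return [a.asset_id for a in assets if a.publicly_reachable]


inventory = [
    normalize("provider_a", {"instance_id": "a-1", "region": "us-east", "public_ip": "203.0.113.7"}),
    normalize("provider_a", {"instance_id": "a-2", "region": "us-east"}),
    normalize("provider_b", {"id": "b-1", "location": "eu-west", "network": {"external_access": True}}),
]
print(publicly_reachable_ids(inventory))
```

The point of the sketch is the shape of the design: once every provider's data lands in the same record type, a check such as "which assets are publicly reachable" only has to be written once.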

There is a known playbook in security, and cloud security is a perfect example of how it typically unfolds:

A new technology emerges. At first, companies are hesitant to adopt it, and it takes a few years before a critical mass of them becomes comfortable experimenting with the new tech.

As the implementation of the new technology is underway, companies are optimistic about its potential to impact business operations. Security isn’t one of the major concerns; not because business doesn’t care but because it is unclear what the actual risks are.

As time goes by, it becomes clear that security is a real concern. Bad actors find and exploit vulnerabilities in the new technology, and security products that promise to address the issues appear.

As the market matures and the buyers become increasingly frustrated about the large number of security tools they need to buy to handle a single attack vector, a platform player emerges that unifies the required capabilities under one umbrella.

The opportunity for platform creation in AI security

If AI adoption were to follow the trajectory of cloud adoption, then it should be logical that the first generation of AI security companies is not where the most value is going to be created. The majority of businesses would take a few years before they become comfortable adopting the advancements of artificial intelligence. During that time, a large number of point solutions would appear, and somewhere in the next five or so years as we get a better understanding of what we need to do to secure AI, a platform player would emerge with a strong vision and unify a large number of disjoint security solutions.

I think this scenario is quite logical, intuitive, and well-articulated. The only challenge is that LLMs in their current state have already shattered everything we know about the adoption of security tools.

In one week, the whole world gained familiarity with the new technology, its potential, and the opportunities it can unlock. This is unlike the launch of the cloud and the evangelism it required to gain mainstream understanding and acceptance.

While businesses are indeed hesitant to implement the advancements of AI, employees are not waiting for their permission. A large percentage of people are starting to rely on ChatGPT to help them do their work, be it drafting emails, summarizing research, or writing software. With the cloud, a developer couldn’t just start using AWS without there being a concerted effort to shift the company’s infrastructure to the cloud; with AI, everyone is able to make their own decisions.

Although we still don’t know what attacks against AI we should be protecting ourselves against and the vast majority of threats are pretty theoretical, we have seen early signs of what that could look like. We have seen prompt injection in action, we have seen companies leaking their sensitive data, and several other problems in the wild.

The governments are already being vocal about the concerns surrounding AI and national security. Moreover, security teams are excited to follow the developments in this space knowing that AI has the potential to redefine the way we do security.

All this combined explains the main reason why VCs are so excited about AI security: they see securing AI as the next big opportunity for platform creation. In its blog post titled exactly that, “Securing AI Is The Next Big Platform Opportunity”, Lightspeed, one of the largest top-tier VCs, explains:

“Every new technology presents huge opportunities for innovation and positive change; generative AI may be the single greatest example of that. But each step-function change in technology – an emergence of a new building block like AI for instance – results in a new set of challenges around security. We’ve seen this before: The rise of SaaS led to CASB (Cloud Access Security Broker) and Cloud Security companies such as Netskope and Zscaler. The rise of public cloud led to CSPM (Cloud Security Posture Management) companies such as Wiz. The increasing prevalence of AI is creating a similar opportunity: We expect the rise of a new category of platforms to secure AI.

At a CIO event we hosted recently in New York, we had around 200 IT decision makers in the audience. When asked if they had blocked ChatGPT access, a number of hands went up. This shows how quickly the intersection of cyber security and AI has become top of mind for IT leaders as compared to just a year ago, when ML model security was still a technical space.” - Source: Securing AI Is The Next Big Platform Opportunity

In their piece, Guru Chahal and Nnamdi Iregbulem paint a compelling picture of why AI presents an opportunity for new platform creation, what some of the attack vectors in AI look like, and what questions founders should be asking as they think about building in the AI security space. At this point, a large number of VC firms have created some kind of AI-themed content to show that they are ready to talk AI.

Building a platform focused on AI security without understanding what the AI infrastructure will look like a year from now

Historically, by the time security companies started to get some traction, the underlying infrastructure they were promising to secure was more or less stable. Endpoint security as an area of focus emerged long after Microsoft Windows became the standard for personal workstations. Similarly, cloud security became a thing when AWS was already a leading provider (although Google and Microsoft quickly followed with their offerings).

What is rather interesting about securing AI is that we appear to be trying to figure out how to secure AI before we can understand what that “AI” is going to look like. For example:

It’s unclear who is going to be the winner in the AI space. OpenAI is surely the biggest and most promising company today, but there are many others such as Anthropic and Inflection, on top of open source models and a long list of other companies building their own AI models, infrastructure, and tools.

We don’t know to what extent companies like OpenAI will be able to take care of security. Will they embrace a shared responsibility model, similar to that used by cloud providers? Or, will they embed themselves much deeper and offer all the required security capabilities as a part of their core products, to differentiate against competition and expedite enterprise adoption? The OpenAI team, in particular, has been quick to learn what enterprises need. Since security, trust, and safety are some of the critical requirements for adoption, it remains to be seen to what extent core security capabilities will be built into the AI infrastructure, and what will need to be layered on top by external players.

These are just some of the questions; there are many more. AI security companies that are being built today are in for a rollercoaster ride. Fundamentally, they have two paths ahead: to pick a vision of the future they believe in and build for that vision, or to design a very flexible solution whose value will remain strong regardless of how the future unfolds.

The first path is a gamble, and one that can result in either great gains or complete demise. Imagine you are a founder of a security startup in 2005 and you need to decide which platform to start with: SoftLayer Technologies or AWS. In 2005, the choice was tricky. Say you know that startups tend to outcompete initiatives by large enterprises, so you go with SoftLayer. Now, fast forward to 2013, when SoftLayer was acquired by IBM and folded into what became IBM Cloud, and you know that your company is doomed. Or, say you believed that DigitalOcean, founded in 2012, would somehow become number one, and made your bet on it; if you did, you would probably be out of business by now. Today, DigitalOcean is reported to own about 2% of the cloud market share.

The second path, namely designing a very flexible solution whose value will remain strong regardless of how the future unfolds, sounds great theoretically, but it remains just that - a theory. And, if you think that we can just ask the McKinseys of this world what the future will hold, here is a story for you to ponder. “In the early 1980s AT&T asked McKinsey to estimate how many cellular phones would be in use in the world at the turn of the century. The consultancy noted all the problems with the new devices—the handsets were absurdly heavy, the batteries kept running out, the coverage was patchy and the cost per minute was exorbitant—and concluded that the total market would be about 900,000. At the time this persuaded AT&T to pull out of the market, although it changed its mind later.” - Source: The Economist. As we now know, the right answer to the question “How many cellular phones will be in use in the world at the turn of the century?” is not 900,000 (a total of 405 million mobile phones were sold worldwide in 2000 alone). I am sharing this story not to throw stones at McKinsey or any other consultancy, but to illustrate that humans are generally quite bad at estimating and predicting the future.

Why we are seeing AI security players raise a lot of capital

Funding rounds used to mean something. The amounts that constitute a typical pre-seed, seed, Series A, or later round vary depending on the economy and geography, but the concept of milestones typically stands:

Pre-seed is a funding round that provides a startup with enough capital to validate the problem-solution fit and invest in developing an early version of the product.

The seed round is typically when the startup invests more in product development going from beta to general availability. More importantly, the seed round is used to find the product-market fit and identify what go-to-market (GTM) motions make the most sense for the company. The intent is to find a GTM motion that will enable the company to scale.

Series A provides capital for scaling the company, validating the business model fit, and developing a repeatable go-to-market motion. Series B and beyond enable founders to accelerate growth and do the land grab capturing as much of the market as possible as quickly as they can.

Source: Series A, B, C Funding: How it Works

The original idea behind funding rounds is simple. Startups are risky, and only some of them will succeed. Instead of providing a lot of money early and seeing most companies go downhill, investors give founders capital in stages, expecting them to hit different milestones before they advance to the next stage. Early on, the metrics in question are blurry - it could be revenue, but it could also be product signups, letters of intent, GitHub stars, and whatever else can be used to prove traction. As the company advances, the only numbers that start to matter are revenue and growth rate.

When markets are hot, the desire of VCs to de-risk innovation and look for milestones before providing more capital goes out of the window. AI security today is exactly that kind of market: companies can go from pre-seed to seed to Series A in a matter of months and without showing any real revenue.

Both founders and investors have reasons to forget about stages of financing and do these deals where companies that have an early product and no material revenue numbers receive $50M to $100M in funding. Founders are looking for an opportunity to build AI security platforms. They know that once the market cools down and VCs realize that there are unlikely to be 50+ “platforms” out there, it will become harder to raise money. What they also know is that nobody can predict how the whole AI security journey is going to unfold, and how long it will take for customers to start adopting and paying for AI security tools. What smart founders are, however, able to foresee is that securing a few years of runway will give them the time to figure stuff out. The challenge is that having a lot of money sitting in the bank account often spoils startups and encourages them to spend more than they need, and as a result increases dilution and takes them further away from finding product-market fit.

Investors, on the other hand, see an opportunity for platform creation. Given that AI is going to shape the direction of our future, and will be embedded into every single tool we rely on, the returns in securing AI are going to be massive. The opportunity in AI security could be so big that $100M rounds, while they may look like a lot of money, are peanuts.

Not all investors are in a rush to splurge on AI security in its current state, and far from all truly understand what the real implications of AI could be. Although almost everyone declares interest in artificial intelligence, most VCs are at the learning stage, not the check-writing stage. They gladly take calls with founders and domain experts to absorb as much as possible but are not ready to move forward with due diligence, and for a good reason: at this point, it’s unclear whether LLMs can be monetized and what moat LLM-powered companies can build around their offerings. As one funny video mocking the LLM hype among VCs points out, "That's the thing about generative AI, it's always generating new ideas...it's not generating any revenues though."

Looking at the VCs’ and founders’ excitement about the applications of AI in security

Using AI to solve fundamental security problems

What makes me even more excited about LLMs is the opportunity to use advancements in artificial intelligence to solve fundamental security problems. There are plenty of examples of how some startups are leveraging AI to solve hard challenges; below are just a few I am personally familiar with.

The most obvious first step in leveraging LLMs is to find ways to augment security teams. Dropzone AI mimics the techniques of elite analysts and autonomously investigates every alert without the need for playbooks or code. Here is how the company website describes the workflow: “After receiving an alert, Dropzone connects and swivel-chairs across your fragmented security tools and data stack. It tirelessly locates, fetches, and feeds relevant information to its LLM-native system. Dropzone’s cybersecurity reasoning system, purpose-built on top of advanced LLMs, runs a full end-to-end investigation tailored for each alert. Its security pre-training, organizational context understanding, and guardrails make it highly accurate. Dropzone then generates a full report, with conclusion, executive summary, and full insights in plain English. You can also converse with its chatbot for ad-hoc inquiries”.

Another startup that tackles the problems of SOCs is Salem Cyber. The company’s product automatically investigates all cyber alerts that the security team’s existing detection tools produce, using AI-backed software. They use existing tools in the customer's environment to only escalate real threats. The core idea is that detection technology can produce an overwhelming number of cyber alerts that should be investigated, but only a few actually matter. Salem Cyber sees its mission as helping security teams focus on what matters.

The security operations center isn’t the only place where autonomous AI agents can change the way security teams do their job. Nullify, for instance, is working to reimagine product security from first principles and enable every engineering team to access world-class security engineering expertise. This means augmenting security engineers and doing tasks that previously had to be done by humans. This is different from “copilots”, which depend heavily on Q&A-style interactions that can be time-consuming; the goal is to have Nullify operate as an “agent” of the security team, much as a security team might get staff augmentation from an outsourcing or consulting firm.

None of these companies sees its goal as replacing people. Instead, they recognize that 1) a lot of the manual tasks security analysts and engineers are doing today can and should be automated, and 2) companies that cannot afford to hire security analysts and engineers still need someone to do security.

A lot of interesting innovations are happening in the services space. AirMDR is proposing a new approach to managed detection and response (MDR), powered by a Virtual Analyst capable of handling 80-90% of tasks in security operations by combining advances in AI, automation, and decades of human cybersecurity expertise. I find AirMDR’s approach particularly promising because AI, and LLMs in particular, have the potential to automate tedious parts of security, offer customers transparency into the work of service providers, and most importantly, enable service providers to break from the linear economics of scaling. If service providers are no longer forced to scale their teams linearly, it creates opportunities for higher margins, higher exit multiples, and subsequently, a new age for service providers. On the other hand, given the enmeshment between products and services, it is also entirely possible that AI and LLMs will enable product companies to build custom detections for individual customer environments based on the context available in these environments, and a chat interface that customers can interact with could address the responsiveness problem of large vendors. Whatever happens, I am confident the line between products and services in security will continue to blur.

There is a whole multitude of ideas and approaches enabled by the advancements of AI that would not have been possible a year or two ago. Every month, I meet smart, ambitious founders looking to make a dent in the industry, and choosing machine learning, AI, and LLMs as a way to do it. Joshua Neil & team, for example, are taking yet another approach to combining security, data science, and AI to help companies achieve their security outcomes. And StackAware has become one of the most active service providers tackling AI security risks.

When it comes to foundational security problems, I think customers won’t be looking for AI but for tools good enough to solve their problems well. The “good enough” part is the key here. While many founders are excited about improving detection accuracy by 0.001%, most buyers don’t have the tools to assess these kinds of improvements, aren’t sophisticated enough to want them, and are right to question why that would matter when they are still grappling with asset discovery, patching, vulnerability management, and other basics. What follows is that customers won’t be looking to switch from an “old-gen” tool to the “AI-enabled” one if what they would be getting is a 5-10% improvement in their capabilities. The new tech has to be substantially better for buyers to consider switching.

Staying realistic about the applications of AI in security and how it is going to reshape the market

In the context of AI for security, security leaders and practitioners are rightfully excited about how the advancements of artificial intelligence can help solve many of the foundational problems in the industry. That said, we need to remember that generative AI is not a panacea for all security and business problems.

As Jon Bagg, Founder and CEO of Salem Cyber, rightfully pointed out in one of our chats, in their current state Large Language Models (LLMs) have three primary drawbacks: inference cost, inference speed, and imperfect reasoning. Human-derived chat is one of the potential applications of this technology that is the least sensitive to these three problems. This is because:

The chat can post responses word-by-word and let people read while the model is still doing its work. This flow and the fact that humans process new information slower than machines create natural buffering and conceal the fact that ChatGPT can take a while to write responses.

The chat format also solves the cost problem because people need to read and think about the response before they put in a new query. Without a natural gating function, the number of concurrent requests can get too high, which would make using the model insanely expensive.

The type of people who interact with generated text fall under the category of “knowledge workers”. They have great experience in gathering imperfect information, assessing it, comparing different sources, and finding what makes the most sense. Because they are used to the fact that information is generally incomplete and imperfect, for them the reasoning gaps of ChatGPT are less of a problem.

ChatGPT was an easy application to put on top of the LLM as it is insensitive to the foundational problems the model has. Once we take LLMs in their current state into the security world, all of a sudden these and other limitations become a gating function for some of the things people are hoping LLMs will do.

Fundamentally, there are problems AI can and cannot solve; among those it can solve, some are best suited to be solved by LLMs, and others - by other techniques of artificial intelligence. As the state of LLMs evolves, the market is going to determine what the relative value of their applications in security is, and what the customers will be willing to pay for the advantages they provide.

AI models require a lot of data, and that doesn’t come cheap. It appears that to benefit from LLMs, a problem would need to be big and painful enough to compel people to rely on AI. Take incident response as an example: it happens so infrequently these days that the only benefit of solving it with AI would be an “easy” button triggering automation. Incident response has a speed problem (it needs to happen fast), but doesn’t have a volume problem (it is infrequent). Phishing is the opposite case. If the security team only needed to respond to one phishing attempt per day, phishing would most likely not have become a problem that needed to be automated. Because the volume of emails is so large, there is no alternative to using AI. No security team can afford to hire hundreds of analysts to manually review all incoming emails and do that in real time, without impacting the work of employees.

Problems that can best be solved by AI are problems that have both speed and scale constraints. Triaging a large number of insights and detections to decide which of them need to be responded to, or reviewing millions of lines of code and suggesting fixes for vulnerabilities and insecure code implementation, are perfect problems for artificial intelligence to tackle. LLMs are also particularly well suited to problems that require the decision-maker to analyze a lot of information, conduct investigations, summarize findings, and supply the right context for making decisions.
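A back-of-the-envelope calculation shows why volume changes the picture. The Python sketch below estimates how many analysts it would take to review a stream of items by hand. All of the inputs are assumptions chosen for illustration (the function name, six productive hours per analyst per day, and the per-item review times); they are not measurements from any real security team.

```python
import math


def analysts_needed(items_per_day: int,
                    minutes_per_item: float,
                    productive_hours_per_analyst: float = 6.0) -> int:
    """Rough headcount needed to manually review every item in one day."""
    total_minutes = items_per_day * minutes_per_item
    minutes_per_analyst = productive_hours_per_analyst * 60
    return math.ceil(total_minutes / minutes_per_analyst)


# Low volume: one suspicious email a day, 15 minutes to review (assumed).
print(analysts_needed(items_per_day=1, minutes_per_item=15))

# High volume: 100,000 emails a day at one minute each (assumed).
print(analysts_needed(items_per_day=100_000, minutes_per_item=1))
```

Under these assumptions, the low-volume case fits comfortably within a single analyst's day, while the high-volume case needs a team in the hundreds, and that is before accounting for the requirement to review in real time.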

It appears that in the short term, some of the most common applications of LLMs in cybersecurity are likely to be centered around summarization, recommendations, and helping people do their work - writing reports, drafting search queries, and writing samples of code, to name a few. Larger-scale automation is probably the next step, but for that, we are likely going to need a new generation of solutions built for the AI-first world. As of today, we are limited by what our existing tooling and infrastructure allow us to do. A Splunk query, for example, has to be exact for it to work and return the data correctly. Any gap in reasoning would break Splunk's ability to return useful data, and with that, impact the level of trust security teams have in automation. It is also worth remembering that running LLMs at scale is not going to be cheap. A case in point is Microsoft Copilot, which is going to cost $30 per user, per month, and only be available to Enterprise E3 customers with more than 300 employees.
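To put that pricing in perspective, the arithmetic is simple. The sketch below takes the $30 per user, per month figure and a 300-person company from the paragraph above; the function name is made up for this example, and it assumes list price with no discounts or additional licensing terms.

```python
def annual_license_cost(seats: int, price_per_seat_per_month: float) -> float:
    """Yearly spend for a per-seat, per-month subscription at list price."""
    return seats * price_per_seat_per_month * 12


# 300 seats at $30 per seat per month, the figures cited above.
print(annual_license_cost(seats=300, price_per_seat_per_month=30))
```

That works out to $108,000 a year for the smallest company in this example, for a single AI assistant, on top of whatever the company already pays for the underlying products.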

A lot of the problems we are grappling with today cannot be solved by layering another SaaS tool or an AI model on top of fundamentally insecure, poorly designed infrastructure. Moreover, many of the cybersecurity product categories are fundamentally just features that should exist as part of larger platforms. Having these features powered by AI does not change this reality. Lastly, artificial intelligence does not magically lead to product-market fit or enable cybersecurity startups to scale. Customers are going to be looking for outcomes, not for specific tech. When every security product relies on some elements of AI, it won’t matter to what extent it leverages the technology; the only thing that will matter is the outcomes produced by the solution.

Anticipating the fierce fight between the incumbents and startups in the AI for security space

I have heard from a few people who are convinced that because Microsoft, Palo Alto, Snyk, and CrowdStrike, to name a few, have amassed large amounts of data, they will inevitably win in the age of AI. The reality is much more complex.

The incumbents do indeed have several advantages over the new entrants. First and foremost, training models is expensive, and startups would be burning a lot of capital and incurring too much dilution if they all attempted to build their own models. Large corporations, on the other hand, have a lot of money to spend. Additionally, training requires a lot of data, which startups don’t have. Models trained on public data alone are of limited use because the same data is available to anyone in the industry; the competitive advantage will come from training models on proprietary real-time data from customer environments. Lastly, established vendors have built solid distribution channels, a factor that in cybersecurity matters more than anything else. It will enable them to plug any new tool into an existing distribution network and just start selling.

On the other hand, we need to be cautious and not fall into the trap of assuming that today’s biggest security vendors are certain to win in the new AI world. When large infrastructure shifts happen, incumbents that were deeply entrenched in the previous way of building products cannot easily shift their technology stacks and business models to adjust to the new reality. This is why many cybersecurity companies that dominated the on-prem world failed to adjust to cloud-native environments. It follows that being able to layer a copilot chatbot on top of an archaic tech stack is not the same as building an AI-first company. In my opinion, security copilots are the lowest and the most basic example of what LLMs can enable. It is akin to using Google just to search for “Wikipedia”: still useful, but it’s only a small example of what Google is capable of.

LLMs will place a great emphasis on the importance of good user experience. Large companies have a lot of data, but their products, which tend to have bad user experience and are powered by legacy stacks, will constrain their ability to realize the full potential of artificial intelligence. A lot of the battles in the new AI-first world will be fought in the areas of user experience and technological flexibility, and that’s where startups tend to thrive.

These two ways to look at the same problem highlight the simple truth that it is still too early to see which of these two scenarios, or any other, is more likely to happen. Investors are in the game of betting on startups over incumbents, so it is no surprise they are bullish on ambitious founders willing to take their bets and make their vision of the world come true.

AI is not a new tool for security companies, nor is securing AI a new problem

It is important to remember that AI is not new to security, nor is securing AI a new problem.

Machine learning (ML) is a subset of artificial intelligence that enables machines or systems to learn and improve from experience. Cybersecurity has been leveraging ML since the early 2010s. Cylance, now a part of BlackBerry, and Darktrace were some of the earliest adopters of AI, and the latter has built most of its go-to-market around the message of being a “World-leading Cyber AI”. An example of an even more interesting application of AI in cybersecurity is Abnormal. The company identified a problem every security team has been struggling with and used machine learning to solve it well. This enabled Abnormal to become one of the leaders in the email security market.

Source: CyberAIWorks.com, a division of BlueAlly, an authorized Darktrace reseller

Since the applications of AI in security aren’t new, we have been fortunate to accumulate several learnings. First and foremost, we know that security teams are not comfortable with black-box algorithms. Security practitioners are seeking to understand why a product made the decision it did, and which criteria it considered for making the determination that a certain behavior is suspicious. Second, we have learned that security teams are unwilling to delegate control over response to security products. Incident response is highly reliant on context: just because something looks suspicious on an endpoint, the machine cannot be immediately isolated from the network without additional investigation and a look at the broader context. The endpoint could turn out to be a critical server responsible for processing millions of dollars per minute, and an hour of downtime could lead to hundreds of millions of dollars in lost revenue. Another learning comes from the developer tools space: when software developers are writing code, they don’t want to see an annoying Clippy-like box of suggestions on how to make that code more secure. These are just a few highlights; people who have been in the industry long enough can certainly share more.

Artificial intelligence as an attack vector is also not entirely new. Companies have been using machine learning to decide which trades to execute on Wall Street, to adjudicate credit applications, and to detect anomalies in MRI, CAT, and X-ray scans, to name a few. With the rise of LLMs, the attack surface is rapidly expanding beyond machine learning, computer vision, and the like, but we should already have a good body of knowledge about securing algorithms and ML models to rely on.

The biggest problem today is education about AI

The biggest challenge today is education about AI, how it works, and the risks it poses; this is especially the case with generative AI and Large Language Models (LLMs).

As Caleb Sima, AI Safety Initiative Chair at Cloud Security Alliance, explains on the Cloud Security Today podcast with Matthew Chiodi,

“Today, the biggest worry you hear about is CISOs shutting off access to OpenAI and blocking it completely, banning all employees from using ChatGPT. Why? Because of the fear that they are going to take all of this confidential data, shove it in OpenAI, and ask questions about that data. That data then goes to OpenAI which will re-use it in its training model so that some attacker can then extract the company data out through the LLM. This is the fear. For this specific thing, I absolutely think it’s a low risk. LLMs don’t work this way. A lot of people think it’s like a data store: you throw a bunch of training data in a data store, then you ask [a chat] and it directly takes the data from the store and spits it out. LLMs don’t work that way. They are generators, they work off prediction. It’s all about what word is most likely to follow the next word.”

An unclear understanding of which risks are real and which ones are either impossible, highly theoretical, or extremely unlikely makes it hard for customers to grasp what controls they should be putting in place. This knowledge gap is going to have direct consequences for the adoption of security products. I anticipate that, as with the adoption of the cloud, most companies will take time before they start to build their own AI models. Although all the challenges surrounding AI adoption in the enterprise will eventually have to be solved, compliance is almost inevitably going to be the one that gets tackled first. Already today, the growing number of regulations is making it clear that companies will be required to check the boxes and show that they comply with ever-expanding requirements well before they understand the new technologies enough to put actual defenses and guardrails in place to secure them.

Closing thoughts

When looking at AI in the context of security, it’s important to keep in mind two angles: AI for security, and security for AI. When it comes to security for AI, we must acknowledge that as of today, we are all still waiting for the emergence of solid foundations of the AI infrastructure. The rate of innovation is insane: every day, the very fundamentals of what we thought was possible change. When it comes to leveraging AI to solve fundamental security problems, we are right to be excited. Yet, it is important to be mindful that AI and Large Language Models are not a panacea for all security problems. Remember: when all we have is a hammer, everything looks like a nail. The good news is that we have accumulated a large number of tools in our toolboxes beyond just LLMs, so it’s a matter of keeping that in mind as we go about building the future of security.

As humans, we tend to overestimate the impact of new tech in the short run and underestimate it in the long run. I think that to realize the full potential of AI, we need to free our minds to think big - bigger than copilots, and certainly bigger than using LLMs to generate search queries for our legacy solutions.
